Introduction

The aim of this study was to compare the precision and accuracy of 6 imaging software programs for measuring upper airway volumes in cone-beam computed tomography data.

Methods

The sample consisted of 33 growing patients and an oropharynx acrylic phantom, scanned with an i-CAT scanner (Imaging Sciences International, Hatfield, Pa). The known oropharynx acrylic phantom volume was used as the gold standard. Semiautomatic segmentations of the patients’ oropharynx and the oropharynx acrylic phantom, with interactive and fixed threshold protocols, were performed by using Mimics (Materialise, Leuven, Belgium), ITK-Snap (www.itksnap.org), OsiriX (Pixmeo, Geneva, Switzerland), Dolphin3D (Dolphin Imaging & Management Solutions, Chatsworth, Calif), InVivo Dental (Anatomage, San Jose, Calif), and Ondemand3D (CyberMed, Seoul, Korea) software programs. The intraclass correlation coefficient was used for the reliability tests. A repeated measurements analysis of variance (ANOVA) test and post-hoc tests (Bonferroni) were used to compare the software programs.

Results

The reliability was high for all programs. With the interactive threshold protocol, the oropharynx acrylic phantom segmentations with Mimics, Dolphin3D, OsiriX, and ITK-Snap showed volume errors of less than 2% compared with the gold standard. Ondemand3D and InVivo Dental had errors of more than 5% compared with the gold standard. With the fixed threshold protocol, the volume errors were similar (–11.1% to –11.7%) among the programs. In the oropharynx segmentation with the interactive protocol, ITK-Snap, Mimics, OsiriX, and Dolphin3D were statistically significantly different (P <0.05) from InVivo Dental. No statistical difference (P >0.05) was found between InVivo Dental and Ondemand3D.

Conclusions

All 6 imaging software programs were reliable but had errors in the volume segmentations of the oropharynx. Mimics, Dolphin3D, ITK-Snap, and OsiriX were similar and more accurate than InVivo Dental and Ondemand3D for upper airway assessment.

For the last century, the gold standard method for analysis of craniofacial development was cephalometry, with linear and angular measurements performed on lateral headfilms. However, as a 2-dimensional representation of 3-dimensional (3D) structures, lateral headfilms offer limited information about the airways. Information regarding axial cross-sectional areas and overall volumes can only be determined by 3D imaging modalities. Medical computed tomography is a 3D imaging modality used in medicine but not as a routine method for airway analysis because of its high cost both financially and in terms of radiation. These drawbacks were overcome with the introduction of cone-beam computed tomography (CBCT). CBCT is becoming a popular diagnostic method for visualizing and analyzing upper airways. Since its introduction in 1998, CBCT technology has been improved, with lower costs, less radiation exposure to patients, and better accuracy in identifying the boundaries of soft tissues and empty spaces (air). Furthermore, CBCT allows for the assessment of axial cross-sectional areas and volumes of the upper airways. The accuracy and reliability of CBCT for upper airway evaluation have been validated in previous studies, and the use of CBCT for airway evaluation has been reported in a systematic review of the literature.

The evaluation of the size, shape, and volume of the upper airway starts by defining the volume corresponding to the airway passages, a process called segmentation. In medical imaging, segmentation is defined as the construction of 3D virtual surface models (called segmentations) to match the volumetric data. In other words, it means separating a specific element (eg, the upper airway) and removing all other structures that are not of interest for better visualization and analysis. Upper airway segmentation can be either manual or semiautomatic. In the manual approach, the segmentation is performed slice by slice by the user. The software then combines all slices to form a 3D volume. This method is time-consuming and almost impractical for clinical application. In contrast, semiautomatic segmentation of the airway is significantly faster than manual segmentation. In the semiautomatic approach, the computer automatically differentiates the air and the surrounding soft tissues by using the differences in density values (grey levels) of these structures. In some programs, the semiautomatic segmentation includes 2 user-guided interactive steps: placement of initial seed regions in the axial, coronal, and sagittal slices, and selection of an initial threshold.
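The two interactive steps described above (seed placement and threshold selection) can be illustrated with a seeded region-growing sketch. This is only an illustration under stated assumptions: the function name `grow_region`, the 6-connectivity rule, and the toy grey levels are hypothetical, and none of the programs compared in this study is claimed to work exactly this way.

```python
from collections import deque


def grow_region(volume, seed, lower, upper):
    """Collect voxels connected to `seed` whose grey level lies in [lower, upper].

    `volume` is a nested list indexed as volume[slice][row][column].
    Connectivity is 6-neighbour (faces only), which is an assumption.
    """
    depth, rows, cols = len(volume), len(volume[0]), len(volume[0][0])

    def inside(z, y, x):
        return 0 <= z < depth and 0 <= y < rows and 0 <= x < cols

    def accepted(z, y, x):
        return lower <= volume[z][y][x] <= upper

    # A seed placed outside the threshold interval yields an empty segmentation.
    if not inside(*seed) or not accepted(*seed):
        return set()

    region = {seed}
    queue = deque([seed])
    neighbours = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in neighbours:
            nxt = (z + dz, y + dy, x + dx)
            if nxt not in region and inside(*nxt) and accepted(*nxt):
                region.add(nxt)
                queue.append(nxt)
    return region


# Hypothetical single-slice volume: air (about -900) next to soft tissue (about 40).
toy_volume = [[
    [40, -900, -950, 40, -900],
    [40, -920, -940, 40, -910],
    [40, 40, 40, 40, 40],
]]

airway = grow_region(toy_volume, seed=(0, 0, 1), lower=-1000, upper=-587)
print(len(airway))
```

In this toy volume the air pocket in the last column is not reached from the seed, because a column of soft tissue separates it from the seeded region; this is the practical difference between seeded growing and plain thresholding.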

Image thresholding is the basis for segmentation. When the user determines a threshold interval, it means that all voxels with grey levels inside that interval will be selected to construct the 3D model (segmentation). Lenza et al reported the use of a single threshold value to segment the airway in each patient’s CBCT scan. This approach can generate errors, especially in volume analysis, but it is certainly more reproducible than the use of a dynamic threshold. However, there are few studies comparing the results of threshold filtering with different imaging software programs for airway assessment.
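As a minimal sketch of how a threshold interval translates into a volume, the snippet below counts the voxels whose grey level falls inside the interval and multiplies the count by the volume of one voxel. The function name and the toy data are hypothetical; the 0.3-mm isotropic voxel and the –1000 to –587 interval are taken from the Material and methods section, and real software may add steps (connectivity, smoothing, surface meshing) that are not modeled here.

```python
def threshold_volume_mm3(volume, lower, upper, voxel_size_mm):
    """Volume (mm^3) of all voxels with grey level inside [lower, upper].

    `volume` is a nested list indexed as volume[slice][row][column];
    voxels are assumed to be isotropic cubes of edge `voxel_size_mm`.
    """
    selected = sum(
        1
        for slice_ in volume
        for row in slice_
        for grey in row
        if lower <= grey <= upper
    )
    return selected * voxel_size_mm ** 3


# Hypothetical 2-slice volume: 4 air voxels surrounded by soft tissue.
toy_volume = [
    [[40, -900, 40], [40, -950, 40]],
    [[40, -920, 40], [40, -600, 40]],
]

volume_mm3 = threshold_volume_mm3(toy_volume, lower=-1000, upper=-587, voxel_size_mm=0.3)
print(f"{volume_mm3:.3f} mm^3")
```

Narrowing the interval in this sketch (for example, an upper bound of –700) drops the boundary voxel at –600 from the count, which shows why the choice of threshold directly affects the measured volume.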

A growing number of software programs to manage and analyze digital imaging and communications in medicine (DICOM) files are introduced to the market every year. Many of these have incorporated tools to segment and measure the airway. A systematic review of the literature reported 18 imaging software programs for viewing and analyzing the upper airway in CBCT. However, validation studies with a clear study design were performed for only 4 software programs. The systematic review suggested that studies assessing the accuracy and reliability of current and new software programs must be conducted before these imaging software programs can be implemented for airway analysis.

The aim of this study was to compare the precision and accuracy of 6 imaging software programs for measuring the oropharynx volume in CBCT images. The primary null hypothesis, that there are no significant differences in airway volume measurements among the 6 imaging software programs, was tested.

Material and methods

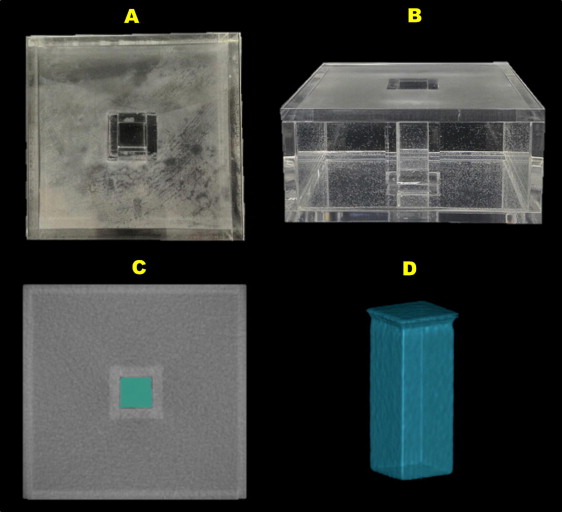

This study was approved by the ethical committee of Pontifical Catholic University of Rio Grande do Sul in Brazil. The records we used were obtained from the patient database of the Department of Orthodontics and consisted of the pretreatment CBCT scans of a preexisting rapid maxillary expansion sample. The sample included 33 growing patients (mean chronologic age, 10.7 years; range, 7.2-14.5 years) with transverse maxillary deficiency and no congenital malformations. Additionally, a custom-made oropharynx acrylic phantom with a known volume was used as the gold standard (Fig 1). The oropharynx acrylic phantom consisted of an air-filled plastic rectangular prism surrounded by water. Water was the medium of choice because it has a similar attenuation value to soft tissue. The outer surface of the phantom was dimensioned to simulate a growing patient’s neck, and the rectangular prism to simulate the oropharynx. The oropharynx acrylic phantom’s dimensions were measured to the nearest 0.01 mm by using digital calipers (model 727; Starret, Itú, São Paulo, Brazil), and the volume was calculated by multiplying the base area by the height. Additionally, the oropharynx acrylic phantom’s volume was confirmed by using the water weight equivalent. The oropharynx acrylic phantom was filled with distilled water at 20°C, and the water weight was determined by using a digital scientific scale (model BG1000; Gehaka, São Paulo, São Paulo, Brazil).

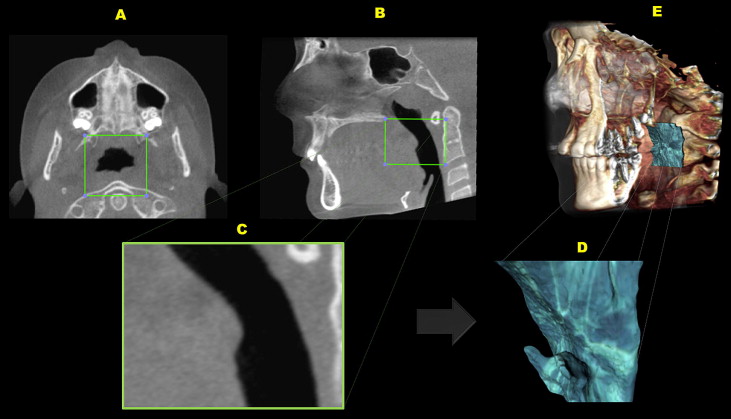

Patients and the phantom were scanned with the i-CAT scanner (Imaging Sciences International, Hatfield, Pa) set at 120 kVp, 8 mA, scan time of 40 seconds, and 0.3-mm voxel dimension. The images were reconstructed with a 0.3-mm slice thickness and exported as DICOM files. Any CBCT scans with artifacts distorting the airway borders were excluded from this study. CBCT scans were imported into OsiriX software (version 4.0; Pixmeo, Geneva, Switzerland) for head orientation and definition of the oropharynx’s region of interest. The head orientation was performed by using the palatal plane as a reference (ANS-PNS parallel to the global horizontal plane in the sagittal view and perpendicular to the global horizontal plane in the axial view). After head orientation, a tool in OsiriX (Vol cutter) was used to select and crop the oropharynx, according to the following references: superior limit, extension of the palatal plane (ANS-PNS) to the posterior wall of the pharynx; inferior limit, a plane parallel to the palatal plane that passes through the most anteroinferior point of the second cervical vertebra; anterior limit, a plane perpendicular to the palatal plane that passes through the posterior nasal spine; posterior limit, posterior to the posterior wall of the pharynx (Fig 2, A and B). The regions not of interest were excluded, and the new volume, containing only the oropharynx region, was exported as a new DICOM file, with the same voxel resolution but a smaller file size (Fig 2, C).

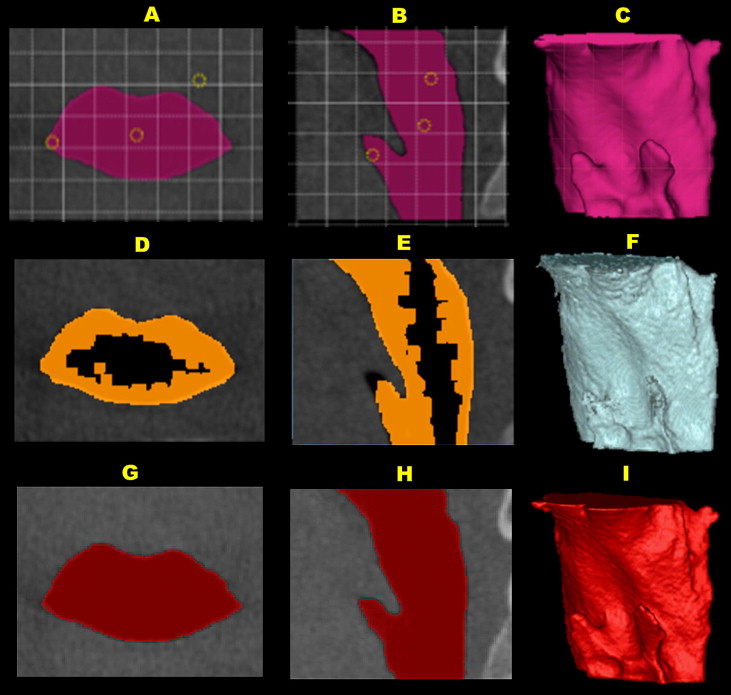

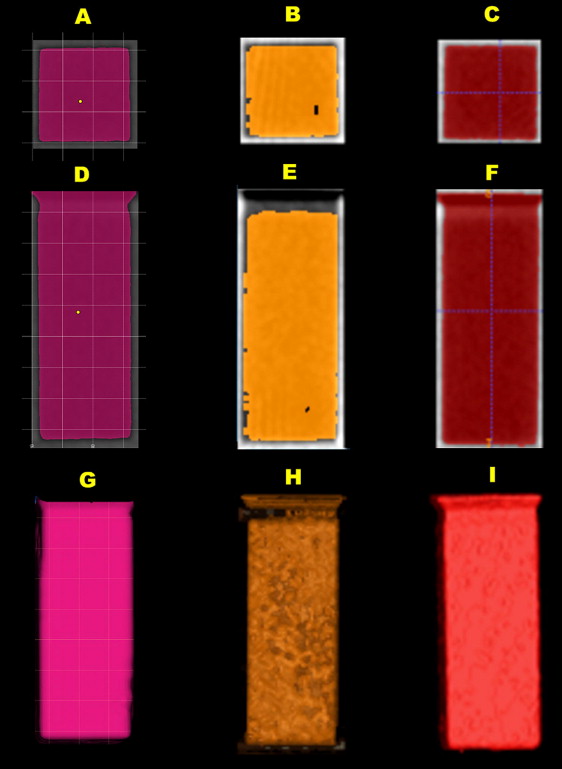

Six imaging software programs were used to segment and compute volumes from the CBCT images of the patients’ oropharynx and the oropharynx acrylic phantom (Figs 3 and 4). The specifications of each software program are shown in Table I. The oropharynx and the oropharynx acrylic phantom segmentations were performed according to each software manufacturer’s recommendations, with 2 techniques for semiautomatic segmentation: (1) interactive threshold interval, as used in a previous study, and (2) fixed threshold interval. In the interactive thresholding, the operator selected the best threshold interval based on a visual analysis of the anatomic boundaries of the oropharynx and the oropharynx acrylic phantom in the axial, sagittal, and coronal slices. In the fixed threshold, the threshold interval was fixed (–1000 to –587 grey levels) to test the variability among the software programs. The segmentations with interactive thresholding were performed with Mimics (version 14.12; Materialise, Leuven, Belgium), Dolphin3D (version 11.7; Dolphin Imaging & Management Solutions, Chatsworth, Calif), Ondemand3D (version 1.0.9.1451; CyberMed, Seoul, Korea), OsiriX (version 4.0; Pixmeo), and ITK-Snap (version 2.2.0; www.itksnap.org); InVivo Dental (version 5.0; Anatomage, San Jose, Calif) was not included in this protocol. The segmentations with fixed thresholding were performed with InVivo Dental, Mimics, Ondemand3D, OsiriX, and ITK-Snap; Dolphin3D was not included in this protocol.

| Software | Description | Operating system | Availability |
|---|---|---|---|
| Dolphin3D | Version 11.7; Dolphin Imaging & Management Solutions, Chatsworth, Calif | Windows | Not free |
| InVivo Dental | Version 5.0; Anatomage, San Jose, Calif | Windows | Not free |
| Ondemand3D | Version 1.0.9.1451; CyberMed, Seoul, Korea | Windows | Not free |
| Mimics | Version 14.12; Materialise, Leuven, Belgium | Windows | Not free |
| OsiriX | Version 4.0; Pixmeo, Geneva, Switzerland | Mac OS X | Free open source |
| ITK-Snap | Version 2.2.0; www.itksnap.org | Windows, Mac OS X, Linux | Free open source |

Statistical analysis

The oropharynx and the oropharynx acrylic phantom segmentations and the volume analyses were performed by 1 investigator (A.W.). All measurements were made again 2 weeks later. The reliability of the first and second measurements was evaluated by intraclass correlation coefficient. A total of 680 segmentations were performed with the 6 imaging software programs. Values were imported into an Excel spreadsheet (Microsoft, Redmond, Wash).
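The test-retest reliability check can be sketched as follows. The text does not state which form of the intraclass correlation coefficient was used, so this example assumes a two-way, consistency, single-measurement ICC (often written ICC(3,1)); the function name and the volumes are hypothetical and are not the study data.

```python
def icc_consistency(measurements):
    """Two-way, consistency, single-measurement ICC (an assumed form).

    `measurements` holds one row per subject and one column per session.
    Raises ZeroDivisionError if there is no variation at all in the data.
    """
    n = len(measurements)      # subjects
    k = len(measurements[0])   # sessions
    grand = sum(sum(row) for row in measurements) / (n * k)
    row_means = [sum(row) / k for row in measurements]
    col_means = [sum(row[j] for row in measurements) / n for j in range(k)]

    # Between-subject sum of squares.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    # Residual sum of squares after removing subject and session effects.
    ss_err = sum(
        (measurements[i][j] - row_means[i] - col_means[j] + grand) ** 2
        for i in range(n)
        for j in range(k)
    )

    ms_rows = ss_rows / (n - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)


# Hypothetical volumes (mm^3) for 3 subjects measured twice, 2 weeks apart.
sessions = [[7000, 7010], [6500, 6480], [7400, 7420]]
print(round(icc_consistency(sessions), 3))
```

A value close to 1 indicates that the second measurement session reproduces the ranking and spacing of the first; other ICC forms (for example, absolute agreement) would also penalize a systematic shift between sessions.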

The oropharynx acrylic phantom measurements with the 6 imaging software programs failed the Kolmogorov-Smirnov test for normality, so nonparametric tests were used. The oropharynx acrylic phantom segmentations were compared with the gold standard by using the Wilcoxon signed rank test for related samples. The oropharynx volume measurements of the 33 patients, with the 6 imaging software programs and the 2 threshold protocols (interactive and fixed), were normally distributed. Not all imaging software programs offered both interactive and fixed thresholds. A repeated measurements analysis of variance (ANOVA) test was used to compare the Mimics, Dolphin3D, Ondemand3D, OsiriX, and ITK-Snap software programs with the interactive threshold, and a second repeated measurements ANOVA test was used to compare the InVivo Dental, Mimics, Ondemand3D, OsiriX, and ITK-Snap software programs, which support a fixed threshold. Mauchly’s test of sphericity showed that the assumption of sphericity was violated, so the Greenhouse-Geisser correction was applied to the ANOVA results. Additionally, post-hoc tests with the Bonferroni correction were used for pairwise comparisons of the volume measurements between imaging software programs. Statistical significance was established at α = 0.05. The statistical analysis was performed with SPSS software (version 12.0 for Windows; SPSS, Chicago, Ill).
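The Bonferroni step can be sketched as below: every unordered pair of programs is one comparison, and each raw P value is multiplied by the number of comparisons and capped at 1. The function name and the raw P values are hypothetical; only the list of programs comes from the text, and statistical packages may present the adjustment differently.

```python
from itertools import combinations


def bonferroni_adjust(raw_p_values, n_comparisons):
    """Multiply each raw P value by the number of comparisons, capped at 1.0."""
    return {pair: min(1.0, p * n_comparisons) for pair, p in raw_p_values.items()}


# Programs compared with the fixed threshold protocol, as listed in the text.
programs = ["InVivo Dental", "Mimics", "Ondemand3D", "OsiriX", "ITK-Snap"]
pairs = list(combinations(programs, 2))  # every unordered pair once

# Hypothetical raw (unadjusted) P values for two of the pairs.
raw = {
    ("InVivo Dental", "Mimics"): 0.002,
    ("OsiriX", "ITK-Snap"): 0.2,
}
adjusted = bonferroni_adjust(raw, n_comparisons=len(pairs))

ALPHA = 0.05
for pair, p in adjusted.items():
    verdict = "significant" if p < ALPHA else "not significant"
    print(pair, round(p, 3), verdict)
```

The cap at 1 explains why adjusted significance values of exactly 1.000 can appear in a Bonferroni-corrected pairwise table.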

Results

The oropharynx acrylic phantom was used as the gold standard by comparing the actual physical volume with the segmentation volume in the CBCT images. The oropharynx acrylic phantom’s physical volume was computed as 9.405 cm³ with the formula: base area (235.13 mm²) × height (40 mm). Additionally, the oropharynx acrylic phantom’s volume was measured by the water-weight technique. Considering the weight measured (9.40 g) and the standard density of water at 20°C (0.9982 g/cm³), the volume calculated by the rule of 3 (volume = 9.4/0.9982) was 9.410 cm³. By using the average of the 2 methods, the oropharynx acrylic phantom’s actual physical volume (gold standard) was 9.407 cm³.

The method repeatability for oropharynx acrylic phantom segmentations was excellent. The intraclass correlation coefficient between the first and second measurements (mean volume) was 0.999. The Wilcoxon signed rank test showed statistically significant differences (P = 0.005) between the medians of all 10 oropharynx acrylic phantom segmentation volumes and the gold standard. All 6 imaging software programs, with both interactive and fixed threshold protocols, underestimated the gold standard volume (Table II). When the interactive thresholding technique was used, Mimics, Dolphin3D, OsiriX, and ITK-Snap underestimated the volume by less than 2% compared with the gold standard, whereas Ondemand3D and InVivo Dental showed errors of more than 5% (Table II). When the fixed threshold interval technique was used (–1000 to –587 grey levels), the software programs (InVivo Dental, Mimics, Ondemand3D, OsiriX, and ITK-Snap) had similar errors and underestimated the phantom volume by approximately 11% compared with the gold standard (Table II).

| Software program | Segmentation volume mean (mm³) | Gold standard volume (mm³) | Difference (mm³) | Difference (%) |
|---|---|---|---|---|
| Mimics | 9392 | 9407 | −15 | −0.2 |
| Dolphin3D | 9315 | 9407 | −92 | −1.0 |
| OsiriX | 9289 | 9407 | −118 | −1.3 |
| ITK-Snap | 9236 | 9407 | −172 | −1.8 |
| OnDemand3D | 8809 | 9407 | −598 | −6.4 |
| InVivo Dental | 8366 | 9407 | −1041 | −11.1 |
| Mimics FT | 8340 | 9407 | −1067 | −11.3 |
| OnDemand3D FT | 8344 | 9407 | −1063 | −11.3 |
| ITK-Snap FT | 8328 | 9407 | −1079 | −11.5 |
| OsiriX FT | 8310 | 9407 | −1097 | −11.7 |
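The difference columns of the table above follow from simple arithmetic, sketched here for a few rows. The helper name `volume_error` is hypothetical; the volumes are the tabulated means. Because those means are already rounded, a recomputed difference can deviate from the printed one by about 1 mm³ (for example, ITK-Snap).

```python
GOLD_STANDARD_MM3 = 9407  # phantom volume used as the gold standard


def volume_error(segmented_mm3, gold_mm3=GOLD_STANDARD_MM3):
    """Return (absolute difference in mm^3, percentage difference)."""
    difference = segmented_mm3 - gold_mm3
    return difference, 100.0 * difference / gold_mm3


# Tabulated mean segmentation volumes (mm^3); "FT" marks the fixed threshold.
segmented = {
    "Mimics": 9392,
    "Dolphin3D": 9315,
    "OnDemand3D": 8809,
    "OsiriX FT": 8310,
}

for program, volume in segmented.items():
    diff, pct = volume_error(volume)
    print(f"{program}: {diff} mm^3 ({pct:.1f}%)")
```

A negative sign means that the segmentation underestimates the physical volume of the phantom.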

The method repeatability for the patients’ oropharynx measurements was high (ICC ≥0.94) for all imaging software programs (Table III). When oropharynx segmentations were performed with interactive thresholding, the volume measurements with the 6 imaging software programs were statistically different (repeated measures ANOVA with the Greenhouse-Geisser correction: F = 8.3; P = 0.006). The descriptive statistical analysis showed higher oropharynx mean volumes for ITK-Snap (7174 mm³), Mimics (7163 mm³), OsiriX (7086 mm³), and Dolphin3D (7071 mm³), and lower mean volumes for InVivo Dental (6661 mm³) and Ondemand3D (6061 mm³) (Table III). According to the Bonferroni post-hoc paired comparisons, there were no statistically significant differences (P >0.05) among ITK-Snap, Mimics, OsiriX, Dolphin3D, and Ondemand3D. However, the oropharynx volume segmentations with ITK-Snap, Mimics, OsiriX, and Dolphin3D were statistically significantly different from those with InVivo Dental. The comparison between InVivo Dental and Ondemand3D showed no significant difference (Table IV). When the fixed threshold technique was used, the ANOVA with the Greenhouse-Geisser correction showed overall statistically significant differences (F = 30.7; P <0.001) in the oropharynx volume segmentation among all imaging software programs tested (InVivo Dental, Mimics, Ondemand3D, OsiriX, and ITK-Snap). The post-hoc paired comparisons also showed significant differences for most software pairs, with the exception of InVivo Dental, which had no significant difference compared with ITK-Snap, Mimics, and OsiriX with the fixed threshold technique. Also, there was no significant difference between ITK-Snap and OsiriX (Table V).

| Software | Volume measurement 1 (mm³) | Volume measurement 2 (mm³) | ICC | Mean volume (mm³) | SD (mm³) | 95% CI (lower and upper bounds) |
|---|---|---|---|---|---|---|
| ITK-Snap | 7271 | 7077 | 0.99 | 7174 | 607 | 5938, 8409 |
| Mimics | 7206 | 7119 | 0.99 | 7163 | 594 | 5952, 8373 |
| OsiriX | 7093 | 7079 | 0.99 | 7086 | 593 | 5878, 7086 |
| Dolphin3D | 7121 | 7021 | 0.99 | 7071 | 585 | 5879, 8263 |
| InVivo Dental | 6679 | 6644 | 0.99 | 6661 | 587 | 5465, 7858 |
| OnDemand3D | 6198 | 5924 | 0.94 | 6061 | 349 | 5350, 6772 |
| Mimics FT | 6753 | 6753 | 1.00 | 6753 | 583 | 5566, 7940 |
| ITK-Snap FT | 6616 | 6627 | 1.00 | 6622 | 577 | 5445, 7797 |
| OsiriX FT | 6646 | 6645 | 1.00 | 6645 | 575 | 5475, 7817 |
| OnDemand3D FT | 6881 | 6881 | 1.00 | 6881 | 585 | 5688, 8075 |

| Software (I) | Software (J) | Mean difference (I – J) (mm³) | SE (mm³) | Significance † | 95% CI for difference †, lower bound | 95% CI for difference †, upper bound |
|---|---|---|---|---|---|---|
| InVivo | ITK-Snap | −512 ∗ | 53 | .000 | −682 | −343 |
| | Dolphin3D | −410 ∗ | 51 | .000 | −573 | −247 |
| | OsiriX | −425 ∗ | 46 | .000 | −570 | −279 |
| | Ondemand3D | 600 | 364 | 1.000 | −555 | 1755 |
| | Mimics | −501 ∗ | 43 | .000 | −638 | −364 |
| ITK-Snap | InVivo | 512 ∗ | 53 | .000 | 343 | 682 |
| | Dolphin3D | 102 | 57 | 1.000 | −78 | 283 |
| | OsiriX | 88 | 53 | 1.000 | −82 | 258 |
| | Ondemand3D | 1113 | 378 | .090 | −86 | 2311 |
| | Mimics | 11 | 44 | 1.000 | −128 | 151 |
| Dolphin3D | InVivo | 410 ∗ | 51 | .000 | 247 | 573 |
| | ITK-Snap | −102 | 57 | 1.000 | −283 | 78 |
| | OsiriX | −15 | 46 | 1.000 | −159 | 130 |
| | Ondemand3D | 1010 | 365 | .139 | −146 | 2167 |
| | Mimics | −91 | 41 | .517 | −222 | 40 |
| OsiriX | InVivo | 425 ∗ | 46 | .000 | 279 | 570 |
| | ITK-Snap | −88 | 53 | 1.000 | −258 | 82 |
| | Dolphin3D | 15 | 46 | 1.000 | −130 | 159 |
| | Ondemand3D | 1025 | 363 | .122 | −126 | 2176 |
| | Mimics | −76 | 29 | .183 | −167 | 15 |
| Ondemand3D | InVivo | −600 | 364 | 1.000 | −1755 | 555 |
| | ITK-Snap | −1113 | 378 | .090 | −2311 | 86 |
| | Dolphin3D | −1010 | 365 | .139 | −2167 | 146 |
| | OsiriX | −1025 | 363 | .122 | −2176 | 126 |
| | Mimics | −1101 | 365 | .075 | −2259 | 56 |
| Mimics | InVivo | 501 ∗ | 43 | .000 | 364 | 638 |
| | ITK-Snap | −11 | 44 | 1.000 | −151 | 128 |
| | Dolphin3D | 91 | 41 | .517 | −40 | 222 |
| | OsiriX | 76 | 29 | .183 | −15 | 167 |
| | Ondemand3D | 1101 | 365 | .075 | −56 | 2259 |
