Evidence-based health care seeks to base clinical practice and decision-making on best evidence, while allowing for modifications because of patient preferences and individual clinical situations. Dentistry has been slow to embrace this discipline, but this is changing. In the Graduate Periodontology Program (GPP) of the University of Kentucky, an evidence-based clinical curriculum was implemented in 2004. The tools of evidence-based health care (EBHC) were used to create evidence-based protocols to guide clinical decision-making by faculty and residents. The program was largely successful, although certain challenges were encountered. As a result of the positive experience with the GPP, the college is implementing a wider program in which evidence-based protocols will form the basis for all patient care and clinical education in the predoctoral clinics. A primary component of this is a computerized risk assessment tool that will aid in clinical decision-making. Surveys of alumni of the periodontal graduate program show that the EBHC program has been effective in changing practice patterns, and similar follow-up studies are planned to assess the effectiveness of the predoctoral EBHC program.

Evidence-based medicine (EBM) has been described as “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients.” One of the defining characteristics of EBM is that formal processes are used for assessing the reliability of the clinical evidence used to inform clinical decisions. The drivers of the EBM movement have been the need for reliable information by busy clinicians, the shortcomings of traditional sources of information (eg, textbooks), the availability of online databases that serve as repositories of this information, the limited time available to physicians to access information, and the electronic technology that has made searching the databases a simple matter. Another enabling factor is the maturation of clinical epidemiology as a discipline and its application to decision-making in health care. Pressure has also increased to improve patient outcomes, reduce medical errors, make health care more accessible, and reduce medical expenses. Ample evidence shows that medical decision-making is often inconsistent and not based on best evidence or practices. EBM may help alleviate these problems and contribute to improving the quality and consistency of health care.

After a slow start, EBM is beginning to exert a profound effect on health care. Dentistry has been slower than medicine to adopt evidence-based strategies, but this is changing. Recently, the American Dental Association (ADA) held two evidence-based dentistry (EBD) conferences to promote this concept. The ADA hopes to create a cadre of “EBD Champions” who will return to their communities or institutions and promote EBD and its many benefits. Because the conceptual foundation and tools of EBM and EBD are the same, this article uses the more inclusive term evidence-based health care (EBHC) to refer to these concepts.

EBHC consists of five steps, which have been described in greater detail elsewhere:

1. Convert the need for clinical information into an answerable question
2. Find and rank the best evidence with which to answer the question
3. Critically appraise the evidence for validity, impact, and applicability
4. Integrate this evidence with clinical expertise and the patient’s unique circumstances and preferences
5. Evaluate effectiveness and efficiency in executing steps 1 through 4

The first step is to convert the clinician’s need for information into an answerable question. The question may be a “background” question, which concerns a gap in general knowledge about a condition, diagnostic test, or intervention. A dental example might be “Does oral bisphosphonate therapy have an effect on implant survival?” Questions concerning the specific management of a patient may be termed foreground questions. For example, one might ask, “In a 45-year-old female smoker with osteoporosis, what is the risk for implant loss?” The relative need for background or foreground information varies with clinical experience. When dentists have little experience with the condition of interest, more background information is required, which is the situation for third-year medical or dental students (or experienced clinicians who are confronted with an unusual clinical condition). When dentists have more experience, they are more likely to require focused information relating to specific patient management issues (eg, the situation faced by a second-year resident or an experienced clinician).

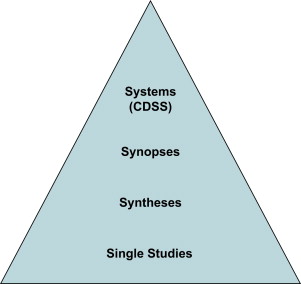

The second step is finding the best evidence with which to answer this question and then ranking it according to the hierarchy of evidence shown in Fig. 1. Individual studies are at the bottom of this pyramid, whereas databases or repositories in which syntheses of evidence may be located (eg, the Cochrane Library) are somewhat higher. Synoptic journals are next, followed by computerized clinical decision support systems, which are capable of assimilating the data for a given patient and suggesting interventions (or further diagnostic tests) based on best evidence.
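
The ordering described above can be expressed as a simple ranking. The following is a minimal sketch rather than anything used in the program described here: the names `EvidenceLevel`, `EvidenceSource`, and `rank_sources` are hypothetical, and the four levels are taken only from the tiers named in the text, so the figure itself may contain levels not represented.

```python
from dataclasses import dataclass
from enum import IntEnum


class EvidenceLevel(IntEnum):
    """Tiers of the evidence pyramid as named in the text (higher is better)."""
    STUDY = 1      # individual studies
    SYNTHESIS = 2  # syntheses of evidence, eg, the Cochrane Library
    SYNOPSIS = 3   # synoptic journals
    SYSTEM = 4     # computerized clinical decision support systems


@dataclass(frozen=True)
class EvidenceSource:
    title: str  # illustrative label only
    level: EvidenceLevel


def rank_sources(sources):
    """Return sources ordered from the highest tier to the lowest."""
    return sorted(sources, key=lambda s: s.level, reverse=True)


# Hypothetical search results for a single clinical question.
found = [
    EvidenceSource("single randomized trial", EvidenceLevel.STUDY),
    EvidenceSource("Cochrane systematic review", EvidenceLevel.SYNTHESIS),
    EvidenceSource("synoptic journal summary", EvidenceLevel.SYNOPSIS),
]
ranked = rank_sources(found)
```

A clinician in the “using” mode would start at the top of `ranked` and move down only when no higher-tier source answers the question.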

Ideally, there would be a seamless link between the clinical decision support system and the patient’s electronic health record, so that suggestions regarding the patient’s diagnosis and treatment would be made in real time as data is entered into the chart. This system would be automatically updated with the latest best evidence as it became available. Obviously, these systems are not in general use, but the soundness of this approach has been shown, particularly in the area of prescribing multiple medications for hospitalized or geriatric patients. To date, the applications with the most immediate usefulness have been those that promote guideline adherence and alert providers to drug interactions or treatment omissions. Examples include computer provider order entry systems combined with clinical decision support systems, which have been shown to improve patient safety through reducing the incidence of adverse drug interactions.

The third step of the classical EBHC process is critical appraisal of the evidence. Evidence must be screened for validity, impact, and clinical applicability. Of particular interest is the concept of external validity. A study is said to possess external validity when it can provide “unbiased inferences regarding a target population (beyond the subjects in the study).” This property is a function of the study population vis-à-vis the research hypothesis. If a study on melanoma risk factors recruited only Caucasian women, the conclusions generated may not apply to African American men, who experience a much lower incidence of this cancer. Populations differ and these differences may have an important effect on study outcomes.

Another example of this population difference is the prevalence of a genotype associated with severe periodontitis. The genotype is present in approximately 30% of individuals of northern European descent, but a study in a Chinese population found a prevalence of only 2.3%. These differences must be considered when extrapolating the results of a study to a more general (or different) population than the study sample.

The impact of the evidence can be measured by the size of the treatment effect for therapeutic interventions. This effect can be reported in a number of ways, depending on the intervention. For example, the relative risk reduction is useful in describing the protective effect of a therapy (eg, fluoride varnish). For other types of interventions, a relative benefit increase may be more appropriate. One particularly useful concept is the number needed to treat, which is the number of patients that must be treated with the therapy in question to prevent one additional bad outcome (however that is defined).
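
These effect measures follow from the event rates in the control and treated groups. The sketch below assumes the standard definitions: absolute risk reduction (ARR) is the control event rate minus the treated event rate, relative risk reduction (RRR) is ARR divided by the control event rate, and the number needed to treat (NNT) is the reciprocal of ARR, conventionally rounded up. The function name and the trial counts are hypothetical and are not drawn from any study cited here.

```python
import math
from fractions import Fraction


def treatment_effect(control_events, control_total, treated_events, treated_total):
    """Compute ARR, RRR, and NNT from raw event counts in two groups."""
    cer = Fraction(control_events, control_total)  # control event rate
    eer = Fraction(treated_events, treated_total)  # treated (experimental) event rate
    if cer == 0:
        raise ValueError("RRR is undefined when the control event rate is zero")
    arr = cer - eer
    rrr = arr / cer
    # NNT is only meaningful when the therapy reduces risk (ARR > 0).
    nnt = math.ceil(1 / arr) if arr > 0 else None
    return {"arr": float(arr), "rrr": float(rrr), "nnt": nnt}


# Hypothetical trial: 40 of 100 controls and 30 of 100 treated patients
# experience the bad outcome.
effect = treatment_effect(40, 100, 30, 100)
# effect == {"arr": 0.1, "rrr": 0.25, "nnt": 10}
```

Exact fractions are used for the intermediate arithmetic so that rounding the NNT up is not disturbed by floating-point error.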

The impact of a diagnostic test can be assessed through examining the sensitivity, specificity, positive predictive value, and negative predictive value. Finally, the intervention must be clinically applicable to each unique patient. This critical appraisal is at the heart of the EBHC concept and is, to a large extent, what Straus and colleagues refer to as “doing” EBHC. The basic process is often referred to as “critical thinking” in academic health care, and it is a critical competency for clinicians, regardless of discipline.
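
The four diagnostic measures named above can all be derived from a two-by-two table of test results against true disease status. This is a minimal sketch using the standard definitions; the function name and the counts are hypothetical.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Compute test accuracy measures from a 2x2 table.

    tp: diseased, test positive    fp: healthy, test positive
    fn: diseased, test negative    tn: healthy, test negative
    """
    return {
        "sensitivity": tp / (tp + fn),  # diseased patients correctly detected
        "specificity": tn / (tn + fp),  # healthy patients correctly cleared
        "ppv": tp / (tp + fp),          # positive results that are true
        "npv": tn / (tn + fn),          # negative results that are true
    }


# Hypothetical sample of 1000 patients, 100 of whom have the disease.
metrics = diagnostic_metrics(tp=80, fp=90, fn=20, tn=810)
# sensitivity = 0.8, specificity = 0.9, ppv is about 0.47, npv is about 0.98
```

In this example the test looks accurate by sensitivity and specificity, yet fewer than half of the positive results are true positives because the disease is present in only 10% of the sample. Predictive values depend on prevalence, which is one reason the population differences discussed earlier matter when applying a test to a new group.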

EBHC has begun to appear in the dental curriculum. These EBHC experiences often consist of sending students to the library, where they conduct searches of the primary literature on some assigned topic and then critique the papers found. These worthwhile exercises are performed with the laudable goal of fostering “critical thinking.” Students are assumed to carry these skills into practice and use them to improve patient outcomes.

Unfortunately, busy clinicians have insufficient time to perform lengthy analyses of the primary literature. Lack of time is cited as one of the drivers of the EBHC movement. Sackett and colleagues originally envisioned EBHC as a quick and efficient way to access best evidence and found that making evidence readily available increased the likelihood that this evidence would be used in making clinical decisions.

Obviously, critically analyzing one randomized controlled trial is an intellectually valuable exercise for health care students. As valuable as this exercise is, however, it is incomplete because it does not really give students a good model for incorporating EBHC into clinical practice. What is needed is quick access to robust information, such as that supplied by systematic reviews or meta-analyses like those found in the Cochrane Library or similar sources. Only when these reviews are not available should a search of the primary literature be required. Unfortunately, most clinicians will have neither the time nor the inclination to perform their own reviews (and may be hard-pressed to conduct searches for even single articles).

In this regard, a useful distinction might be made between “doers” of EBHC and “users” of EBHC. Straus and colleagues point out that clinicians may shift back and forth between the “using” and “doing” modes. They note that “while some clinicians may want to become proficient in practicing all five steps of EBHC, many others would instead prefer to focus on becoming efficient users (and knowledge managers) of evidence.” Although students of all health care disciplines should ideally be competent in both “using” and “doing,” they must at least be able to access and use best evidence, which involves different tools and a different approach than “doing” EBHC. The University of Kentucky College of Dentistry (UKCD) requires students to be competent in both modes, but has emphasized the “using” mode in clinical protocols and education, because it is believed to be the mode most likely to be used by most graduates.

A small group at the UKCD became interested in using EBHC principles to enhance curriculum and clinical services. This article presents a brief review of UKCD’s initial experiences in implementing this initiative. Although they are in the process of creating a predoctoral clinical curriculum based on the concepts of EBHC, much of their experience has been in the Graduate Periodontology Program. The goal was to fully and seamlessly integrate EBHC into the clinical curriculum. The idea was to make it truly an organic part of the clinical and educational missions of the college, because they believed this was the best way to show the usefulness of EBHC to skeptical students and faculty.

The goal was to use the principles and tools of EBHC to guide clinical decision making through the creation of evidence-based treatment protocols for commonly encountered clinical situations. This information was to be incorporated into didactic coursework and applied in the clinical setting. These clinical protocols were to be easily accessed by students, faculty, and staff. This represents a more holistic and integrated form of EBHC than an individual course devoted to EBHC (although the two approaches are not, of course, mutually exclusive).

Korenstein and colleagues reported a similar program at Mount Sinai School of Medicine, in which EBHC is fully integrated into clinical curricula, including women’s health and addiction medicine. The programs have been well received and plans call for expanding this approach into other areas of the curriculum.

Teaching evidence-based health care

One challenge confronting clinical teachers is how best to incorporate EBHC into the curriculum. Three primary modes of teaching EBHC exist:

1. Role-modeling evidence-based practice
2. Using (and making explicit) the use of evidence in clinical teaching
3. Teaching the skills of EBHC (“doing” EBHC)

The first is the practice of EBHC so that it is seamlessly integrated into the care of patients. The second mode involves learning EBHC principles through integrating research evidence into clinical and didactic teaching so that students can see the evidentiary foundation of their education. The third mode involves teaching the skills of “doing” EBHC, wherein students learn to find and critically appraise the evidence. This step is necessary to develop critical thinking skills and, as importantly, give students the tools needed for lifelong learning.

The authors’ efforts have been strongly influenced by those of Sackett, Straus, and their colleagues, whose experience in implementing EBHC in their clinic is extensive and whose insights into what makes for effective (and ineffective) EBHC teaching are invaluable. Sackett, Straus, and colleagues have identified several factors associated with success when teaching EBHC:

1. Process is based on clinical decisions
2. Focus is on learners’ actual learning needs
3. Passive and active learning are both used in a balanced manner
4. What is already known is connected with “new” knowledge
5. Teacher is explicit about appraisal of evidence
6. EBHC is seamlessly integrated into patient care decisions
7. Provides a foundation and tools for lifelong learning

They suggest that EBHC teaching success occurs when the EBHC process is based on real clinical decisions, focuses on learners’ actual learning needs, and connects what is already known by the student with the new knowledge. It is also important that the teacher makes explicit how to appraise the evidence and apply it to a patient’s care in an integrated manner, thus seamlessly incorporating EBHC into the clinical experience. This latter concept has been the guiding principle in attempts to restructure the clinical curriculum.

However, they also discuss some of the mistakes they have made (Box 1), which include emphasizing how to “do” research over how to “use” research findings. EBHC should be seen as a method not only for finding flaws in the published literature but also for identifying evidence that can provide therapeutic guidance. If EBHC sessions become exercises in which no evidence is seen as valid, the result is a “therapeutic nihilism” in which the teacher is reluctant to recommend any treatment. Evidence-based care often provides a bias toward rational action, not therapeutic nihilism. Students must learn not only to critique the literature for errors but also to obtain useful information that may be used in caring for their patients.

Box 1. Mistakes in teaching EBHC

1. Emphasize how to do research rather than how to use research findings to inform clinical practice
2. Emphasize how to perform statistical analyses rather than how to interpret the results of such analyses
3. Find only flaws in the published literature, rather than also trying to find information that offers clinical guidance
4. Cast EBHC as a substitute for the clinician’s judgment and skills rather than as a complement to them
5. Disconnect the EBHC process from the clinical process and the team’s need for clinical information
6. Exceed the amount of time available and/or the team’s attention span
7. Humiliate the learner for not knowing some bit of clinical information
8. Strive for full educational closure by the end of the session, rather than leaving some aspects unresolved to encourage the student to think and learn between sessions


One other mistake is the assumption that learning occurs only during formal course sessions. Clinical learning should occur in the context of patient care, which is why the authors have chosen to attempt to fully integrate EBHC within their clinical teaching and patient care missions. Only then will students see EBHC for what it is: a practical tool for clinical practice, rather than a sterile academic exercise.

The graduate periodontology experience

The first academic unit of the University of Kentucky to implement a comprehensive evidence-based curriculum was the Graduate Periodontology Program (GPP). Consequently, most of the authors’ experience has been in this program. In 2000, work was begun on a set of clinical protocols that were developed according to the principles of evidence-based medicine (Table 1). Whenever possible, systematic reviews and meta-analyses were used, but when these were unavailable, primary literature searches were undertaken. Studies with multiple research hypotheses, small sample sizes, and other deficiencies were not assigned the same importance as systematic reviews and meta-analyses. These protocols were collected into a manual, which is provided in both hard-copy and online formats. The contents of the manual have been used to inform teaching, research, and service in the program. When insufficient high-quality evidence existed, the choices became more difficult.

| Parameter | Details |
|---|---|
| Condition | Chronic periodontitis of early-advanced severity |
| Patient | Any |
| Intervention a | Initial therapy, to consist of scaling and root planing (SRP)/subgingival debridement, oral hygiene assessment and instruction, smoking cessation (if appropriate), and extraction of hopeless teeth (see appropriate protocol – or these might be listed here [eg, class III mobility/depressible, radiographic bone loss > 75%]). Initial therapy must always be followed by a formal reevaluation (ie, comprehensive periodontal examination) 4 to 8 weeks following the last session of SRP. As an alternative to conventional SRP, a full-mouth debridement can be carried out in two appointments over 24 to 48 hours. |
| Compared with | Conventional initial therapy vs. full-mouth debridement vs. coronal scaling and polishing |
| Outcome | Initial therapy is effective, whereas coronal scaling and polishing is not (in terms of improving surrogate indices). In patients with chronic periodontitis in moderately deep pockets, slightly more favorable outcomes for pocket reduction and gain in probing attachment were found following FMD compared with control. |
| Recommendation | Diagnosis of chronic periodontitis requires initial therapy. FMD may provide a slight additional benefit in some cases. |
| Sources | Eberhard J, Jepsen S, Jervøe-Storm PM, et al. Full-mouth disinfection for the treatment of adult chronic periodontitis. Cochrane Database Syst Rev 2008;(1):CD004622; and Hung HC, Douglass CW. Meta-analysis of the effect of scaling and root planing, surgical treatment and antibiotic therapies on periodontal probing depth and attachment loss. J Clin Periodontol 2002;29(11):975–86. |
| Strength of evidence | High |
| Review date | September 2008 |
| Author(s) | M. V. Thomas |
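
Because every protocol in the manual follows the same template, an online version could store each one as a structured record. The sketch below is only an illustration of that idea under stated assumptions: the class `ClinicalProtocol` and the helper `find_protocols` are hypothetical names, the fields simply mirror the rows of the protocol above, and nothing here describes how the UKCD manual is actually implemented.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ClinicalProtocol:
    """One evidence-based protocol; fields mirror the rows of the template."""
    condition: str
    patient: str
    intervention: str
    compared_with: str
    outcome: str
    recommendation: str
    sources: List[str] = field(default_factory=list)
    strength_of_evidence: str = ""
    review_date: str = ""
    authors: List[str] = field(default_factory=list)


def find_protocols(protocols, keyword):
    """Return protocols whose condition mentions the keyword (case-insensitive)."""
    needle = keyword.lower()
    return [p for p in protocols if needle in p.condition.lower()]


# Abbreviated, illustrative entry based on the protocol shown above.
initial_therapy = ClinicalProtocol(
    condition="Chronic periodontitis of early-advanced severity",
    patient="Any",
    intervention="Initial therapy followed by formal reevaluation",
    compared_with="Conventional initial therapy vs. full-mouth debridement "
                  "vs. coronal scaling and polishing",
    outcome="Initial therapy effective; coronal scaling and polishing is not",
    recommendation="Diagnosis of chronic periodontitis requires initial therapy",
    strength_of_evidence="High",
    review_date="September 2008",
    authors=["M. V. Thomas"],
)
matches = find_protocols([initial_therapy], "periodontitis")
```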
